Why does this look so HORRIBLE? Who is in charge of your design? Have you done any A/B testing? Do you want to be taken seriously?

Google redesigns its SERPs (to make ads less obvious)

In an apparent move intended to be evil, Google have just rolled out a new SERP interface. First impressions are: although it seems to have more space, it also seems to make everything harder to read; ads are very much more ‘discreet’ (i.e. they blend in with the ‘organic’ listings); the Goooooooooogle pagination no longer […]

Where do the coordinates in Oblivion take you? To the house of the visual effects supervisor

I watched the Tom Cruise sci-fi movie Oblivion the other day and wondered, in a geeky sort of way, where the coordinates that flash up on a computer screen correspond to. Set in a dystopian future, a shuttle falls to Earth at a lat/long of 41.146576, -73.975739. And that equates to 17 Mein Drive. Now, if I were […]

Just how accurate is Alexa? We compare Google Analytics to Amazon’s ‘Web Information Company’

Alexa vs Reality: I’d always wondered how closely Alexa’s traffic graphs mirror reality. In a recent article on how the Sun’s traffic was diving uncontrollably, I used an Alexa comparison graph to illustrate my point, but I’d never really put the time in to measure its statistics. I think it’s about time that I did. […]

“I want that tested on every browser” What, all 4.5k of them?

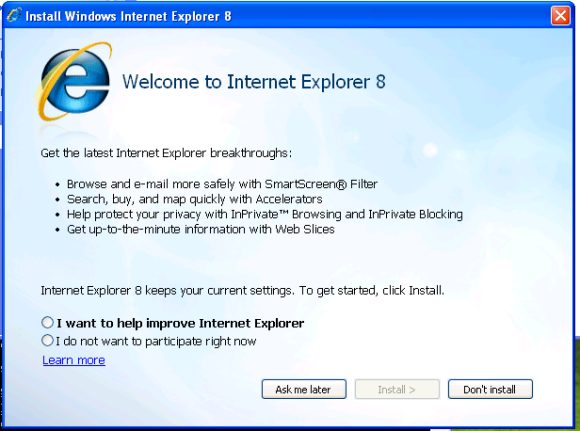

I was looking through Google Analytics stats recently to see how many poor old sods there are who still use IE8, and it surprised me that the audience share of the Internet Explorer suite of browsers was less than 11%. The reason that surprised me was that IE was still in the top 3 browsers […]

I can’t believe I’m saying this, but please don’t forget about IE8 this winter

When OK! Magazine relaunched its website recently, I wasn’t expecting much. Another (somewhat) high-profile responsive site that completely disintegrates in IE6, IE7 & IE8. Remember that for users of Windows XP, IE8 is the latest Internet Explorer browser that they can access and is still the second most popular IE browser, with 25% of […]

Problem with New Relic and Content-Length: Workaround Solution

We had a problem today with a third-party aggregator, News Now, who have been using wget to scrape the Daily Express site to gather content. All of a sudden their files were being cut short by around 100 characters. I tried it myself with curl and reproduced the same problem, but there were a […]

Problem Solved: iPad / iPhone not allowing playback of YouTube videos

The latest in a series of Eureka moments concerning deviously tricky problems, this one drove me nuts. But it’s over now. Monster’s gone. Think of this as therapy. The Problem: One of our clients (The Daily Express) had an issue affecting YouTube videos on their site. When they embedded the new(-ish) iframe code on […]

The Sun gets spanked by Google for cloaking

A few weeks ago I wrote an article on how The Sun allowed Googlebot to access its site. It appears that this was in contravention of Google’s terms and News International have subsequently revoked Googlebot’s privileged access, which has resulted in no more access for sneaky chaps like me and a further plummet in traffic for […]

iOS 7: onBlur, onChange and alerts will bring your site down

It seems that iOS 7 has had mixed reviews since its launch less than a week ago, but today I came across an issue which will bring your site down for iOS 7 users in certain circumstances. Select boxes now ignore onBlur: The site we had the problem with is a car warranty […]